FIFO (computing and electronics)

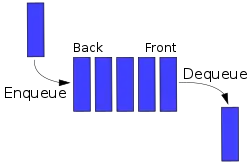

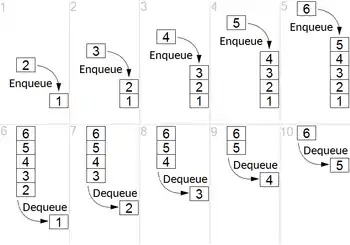

In computing and in systems theory, first in, first out (FIFO) is a method for organizing the manipulation of a data structure (often, specifically, a data buffer) in which the oldest (first) entry, or "head" of the queue, is processed first.

Such processing is analogous to servicing people in a queue area on a first-come, first-served (FCFS) basis, i.e. in the same sequence in which they arrive at the queue's tail.

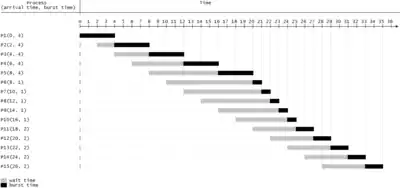

FCFS is also the jargon term for the FIFO operating system scheduling algorithm, which gives every process central processing unit (CPU) time in the order in which it is demanded.[1] FIFO's opposite is LIFO, last in, first out, where the youngest entry, or "top of the stack", is processed first.[2] A priority queue is neither FIFO nor LIFO but may adopt similar behaviour temporarily or by default. Queueing theory encompasses these methods for processing data structures, as well as interactions between strict-FIFO queues.
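The difference between the three disciplines can be illustrated with the C++ standard library containers. The following is a minimal sketch, not a definitive comparison; the helper names `drain_fifo`, `drain_lifo` and `drain_priority` are hypothetical and chosen only for this illustration.

```cpp
#include <queue>
#include <stack>
#include <vector>

// Drain a FIFO queue: values come out in arrival order.
std::vector<int> drain_fifo(const std::vector<int>& arrivals) {
    std::queue<int> q;
    for (int v : arrivals) q.push(v);
    std::vector<int> out;
    while (!q.empty()) {
        out.push_back(q.front());  // oldest entry (head) first
        q.pop();
    }
    return out;
}

// Drain a LIFO stack: values come out in reverse arrival order.
std::vector<int> drain_lifo(const std::vector<int>& arrivals) {
    std::stack<int> s;
    for (int v : arrivals) s.push(v);
    std::vector<int> out;
    while (!s.empty()) {
        out.push_back(s.top());  // youngest entry (top) first
        s.pop();
    }
    return out;
}

// Drain a priority queue: the largest value comes out first, regardless of
// arrival order (std::priority_queue is a max-heap by default).
std::vector<int> drain_priority(const std::vector<int>& arrivals) {
    std::priority_queue<int> pq;
    for (int v : arrivals) pq.push(v);
    std::vector<int> out;
    while (!pq.empty()) {
        out.push_back(pq.top());
        pq.pop();
    }
    return out;
}
```

For the arrivals 3, 1, 2, the FIFO yields 3, 1, 2, the LIFO yields 2, 1, 3, and the priority queue yields 3, 2, 1. If the arrivals happen to be 3, 2, 1, the priority queue output coincides with the FIFO output, which is one way a priority queue can temporarily behave like a FIFO.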

Computer science

Depending on the application, a FIFO could be implemented as a hardware shift register, or using different memory structures, typically a circular buffer or a kind of list. For information on the abstract data structure, see Queue (data structure). Most software implementations of a FIFO queue are not thread-safe and require a locking mechanism to ensure the data structure chain is being manipulated by only one thread at a time.
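One common way to provide such a locking mechanism is to guard every operation with a mutex. The sketch below assumes a single coarse lock around a `std::queue`; the class name `LockedFifo` and its member names are hypothetical, and real implementations may prefer condition variables or lock-free designs.

```cpp
#include <mutex>
#include <queue>
#include <utility>

// Hypothetical wrapper: serialises access to a std::queue with one mutex,
// so only one thread manipulates the underlying structure at a time.
template <typename T>
class LockedFifo {
    std::queue<T> items_;
    mutable std::mutex mutex_;

public:
    // Append a value at the tail of the queue.
    void enqueue(T value) {
        std::lock_guard<std::mutex> lock(mutex_);
        items_.push(std::move(value));
    }

    // Move the oldest value into `out` and return true,
    // or return false if the queue is empty.
    bool try_dequeue(T& out) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (items_.empty()) return false;
        out = std::move(items_.front());
        items_.pop();
        return true;
    }

    bool empty() const {
        std::lock_guard<std::mutex> lock(mutex_);
        return items_.empty();
    }
};
```

Returning a success flag from `try_dequeue` rather than exposing separate `empty()` and `front()` calls avoids a race in which another thread removes the entry between the check and the read.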

The following code shows a linked-list FIFO implementation in C++. In practice, a number of list implementations exist, including the sys/queue.h C macros popular on Unix systems and the C++ standard library std::list template, avoiding the need to implement the data structure from scratch.

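    #include <memory>
    #include <stdexcept>
    #include <utility>

    using namespace std;

    template <typename T>
    class FIFO {
        // Singly linked node; each node owns its successor.
        struct Node {
            T value;
            shared_ptr<Node> next = nullptr;

            Node(T _value): value(_value) {}
        };

        shared_ptr<Node> front = nullptr;  // oldest entry, dequeued first
        shared_ptr<Node> back = nullptr;   // newest entry, enqueued last

    public:
        // Append a value at the back of the queue.
        void enqueue(T _value) {
            if (front == nullptr) {
                front = make_shared<Node>(_value);
                back = front;
            } else {
                back->next = make_shared<Node>(_value);
                back = back->next;
            }
        }

        // Remove and return the oldest value; throws if the queue is empty.
        T dequeue() {
            if (front == nullptr)
                throw underflow_error("Nothing to dequeue");

            T value = front->value;
            front = move(front->next);
            if (front == nullptr)
                back = nullptr;  // release the last node once the queue is empty
            return value;
        }
    };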
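The text introducing the listing notes that the standard library `std::list` template can be used instead of a hand-written list. A minimal sketch of that alternative follows; the adapter name `ListFifo` is hypothetical, and `std::queue` offers a similar ready-made interface.

```cpp
#include <list>
#include <stdexcept>
#include <utility>

// Hypothetical adapter: FIFO behaviour on top of std::list.
template <typename T>
class ListFifo {
    std::list<T> items_;

public:
    // New entries join at the tail of the list.
    void enqueue(T value) { items_.push_back(std::move(value)); }

    // The oldest entry leaves from the head of the list.
    T dequeue() {
        if (items_.empty())
            throw std::underflow_error("Nothing to dequeue");
        T value = std::move(items_.front());
        items_.pop_front();
        return value;
    }

    bool empty() const { return items_.empty(); }
};
```

Enqueuing 10, 20 and 30 and then calling `dequeue()` three times returns 10, 20 and 30 in that order; a fourth call throws `std::underflow_error`.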

In computing environments that support the pipes-and-filters model for interprocess communication, a FIFO is another name for a named pipe.
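On POSIX systems a named pipe can be created with `mkfifo` and then opened like an ordinary file. The sketch below is an illustration under stated assumptions rather than a recommended pattern: it assumes a POSIX environment with a writable `/tmp`, and the function name `round_trip_through_fifo` and the temporary path are hypothetical.

```cpp
#include <fcntl.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

#include <cstdlib>
#include <string>

// Sends `message` through a freshly created named pipe and returns what the
// reading side received. POSIX only; returns an empty string on failure.
std::string round_trip_through_fifo(const std::string& message) {
    char dir_template[] = "/tmp/fifo_demo_XXXXXX";  // hypothetical location
    if (mkdtemp(dir_template) == nullptr) return "";
    const std::string path = std::string(dir_template) + "/pipe";
    std::string received;

    if (mkfifo(path.c_str(), 0600) == 0) {
        pid_t child = fork();
        if (child == 0) {
            // Writer process: open() blocks until a reader opens the pipe.
            int wfd = open(path.c_str(), O_WRONLY);
            if (wfd < 0) _exit(1);
            if (write(wfd, message.data(), message.size()) < 0) _exit(1);
            close(wfd);
            _exit(0);
        } else if (child > 0) {
            // Reader process: bytes arrive in the order they were written.
            int rfd = open(path.c_str(), O_RDONLY);
            if (rfd >= 0) {
                char buf[256];
                ssize_t n;
                while ((n = read(rfd, buf, sizeof buf)) > 0)
                    received.append(buf, static_cast<size_t>(n));
                close(rfd);
            }
            waitpid(child, nullptr, 0);
        }
        unlink(path.c_str());
    }
    rmdir(dir_template);
    return received;
}
```

The reader sees end-of-file once the writer closes its end, so the loop terminates without any explicit length prefix.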

Disk controllers can use FIFO as a disk scheduling algorithm to determine the order in which to service disk I/O requests, where it is also known by the same FCFS initialism used for CPU scheduling.[1]
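To make the FCFS disk policy concrete, the following sketch computes the total head movement when requests are served strictly in arrival order. It assumes a simplified model in which each request is a cylinder number and cost is the distance travelled; the function name `fcfs_head_movement` is hypothetical.

```cpp
#include <cstdlib>
#include <vector>

// Total head movement (in cylinders) when requests are served strictly
// in arrival order, starting from `start_cylinder`.
long fcfs_head_movement(int start_cylinder, const std::vector<int>& requests) {
    long total = 0;
    int position = start_cylinder;
    for (int cylinder : requests) {
        total += std::abs(cylinder - position);  // seek distance for this request
        position = cylinder;                     // head stays where it finished
    }
    return total;
}
```

With the head at cylinder 50 and requests arriving for cylinders 60, 20 and 40, the head moves 10 + 40 + 20 = 70 cylinders. FCFS makes no attempt to reorder requests to shorten that path.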

Communication network bridges, switches and routers used in computer networks use FIFOs to hold data packets en route to their next destination. Typically at least one FIFO structure is used per network connection. Some devices feature multiple FIFOs for simultaneously and independently queuing different types of information.[3]

Electronics

FIFOs are commonly used in electronic circuits for buffering and flow control between hardware and software. In its hardware form, a FIFO primarily consists of a set of read and write pointers, storage and control logic. Storage may be static random access memory (SRAM), flip-flops, latches or any other suitable form of storage. For FIFOs of non-trivial size, a dual-port SRAM is usually used, where one port is dedicated to writing and the other to reading.

The first known FIFO implemented in electronics was designed by Peter Alfke in 1969 at Fairchild Semiconductor.[4] Alfke was later a director at Xilinx.

Synchronicity

A synchronous FIFO is a FIFO where the same clock is used for both reading and writing. An asynchronous FIFO uses different clocks for reading and writing, which can introduce metastability issues. A common implementation of an asynchronous FIFO uses a Gray code (or any unit distance code) for the read and write pointers to ensure reliable flag generation. In asynchronous FIFO implementations, flags must be generated using pointer arithmetic. In synchronous FIFO implementations, by contrast, flags may be generated using either a leaky bucket approach or pointer arithmetic.
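The appeal of a Gray code for pointers that cross clock domains is that consecutive values differ in exactly one bit, so a pointer sampled mid-transition is off by at most one count. The sketch below models the conversion in software; the function names are hypothetical, and an actual design would express this in a hardware description language.

```cpp
#include <cstdint>

// Convert a binary counter value to its reflected-binary Gray code.
std::uint32_t binary_to_gray(std::uint32_t b) {
    return b ^ (b >> 1);
}

// Convert a Gray-coded value back to plain binary (prefix XOR of all bits).
std::uint32_t gray_to_binary(std::uint32_t g) {
    std::uint32_t b = g;
    for (std::uint32_t shift = 1; shift < 32; shift <<= 1)
        b ^= b >> shift;
    return b;
}

// Number of bit positions in which two values differ.
int bits_changed(std::uint32_t a, std::uint32_t b) {
    std::uint32_t diff = a ^ b;
    int count = 0;
    while (diff != 0) {
        count += static_cast<int>(diff & 1u);
        diff >>= 1;
    }
    return count;
}
```

Stepping a plain binary pointer from 7 to 8 flips four bits (0111 to 1000), whereas the corresponding Gray-coded values 0100 and 1100 differ in a single bit.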

A hardware FIFO is used for synchronization purposes. It is often implemented as a circular queue, and thus has two pointers:

- Read pointer / read address register

- Write pointer / write address register

Status flags

Examples of FIFO status flags include: full, empty, almost full, and almost empty. A FIFO is empty when the read address register reaches the write address register. A FIFO is full when the write address register reaches the read address register. Read and write addresses are initially both at the first memory location and the FIFO queue is empty.

In both cases, the read and write addresses end up being equal. To distinguish between the two situations, a simple and robust solution is to add one extra bit for each read and write address which is inverted each time the address wraps. With this setup, the disambiguation conditions are:

- When the read address register equals the write address register, the FIFO is empty.

- When the read and write address registers differ only in the extra most significant bit and the rest are equal, the FIFO is full.
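The two conditions above can be modelled in software as a circular buffer whose pointers are one bit wider than the address. This is a sketch under the assumption that the depth is a power of two; the class name `WrapBitFifo` and its members are hypothetical.

```cpp
#include <array>
#include <cstdint>

// Software model of a hardware-style FIFO with 2^AddrBits entries.
// Each pointer carries AddrBits address bits plus one extra wrap bit.
template <typename T, unsigned AddrBits>
class WrapBitFifo {
    static constexpr std::uint32_t kDepth = 1u << AddrBits;        // entries
    static constexpr std::uint32_t kAddrMask = kDepth - 1u;        // address bits
    static constexpr std::uint32_t kPtrMask = (kDepth << 1) - 1u;  // address + wrap bit

    std::array<T, kDepth> storage_{};
    std::uint32_t read_ptr_ = 0;
    std::uint32_t write_ptr_ = 0;

public:
    // Empty: the pointers are equal, wrap bit included.
    bool empty() const { return read_ptr_ == write_ptr_; }

    // Full: the pointers differ only in the extra most significant bit.
    bool full() const { return (read_ptr_ ^ write_ptr_) == kDepth; }

    // Store a value at the write address; refuses when full.
    bool write(const T& value) {
        if (full()) return false;
        storage_[write_ptr_ & kAddrMask] = value;
        write_ptr_ = (write_ptr_ + 1u) & kPtrMask;  // wrap bit flips on address wrap
        return true;
    }

    // Fetch the oldest value from the read address; refuses when empty.
    bool read(T& out) {
        if (empty()) return false;
        out = storage_[read_ptr_ & kAddrMask];
        read_ptr_ = (read_ptr_ + 1u) & kPtrMask;
        return true;
    }
};
```

Because the wrap bit is simply the next bit above the address, incrementing the wider pointer inverts it automatically whenever the address bits roll over, and all `kDepth` storage locations remain usable.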

References

- Andrew S. Tanenbaum; Herbert Bos (2015). Modern Operating Systems. Pearson. ISBN 978-0-13-359162-0.

- Kruse, Robert L. (1987) [1984]. Data Structures & Program Design (2nd ed.). Englewood Cliffs, New Jersey: Prentice-Hall. p. 150. ISBN 0-13-195884-4.

- James F. Kurose; Keith W. Ross (July 2006). Computer Networking: A Top-Down Approach. Addison-Wesley. ISBN 978-0-321-41849-4.

- "Peter Alfke's post at comp.arch.fpga on 19 Jun 1998".