Geometric Distribution

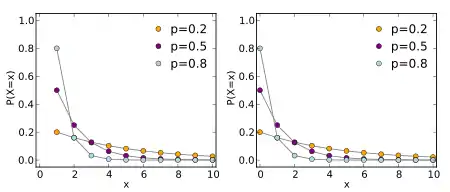

Probability mass function

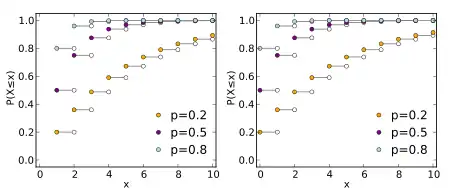

Cumulative distribution function

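| Parameters | $0 < p \le 1$ success probability (real) |
|---|---|
| Support | $k \in \{1, 2, 3, \ldots\}$ |
| PMF | $(1-p)^{k-1}\,p$ |
| CDF | $1-(1-p)^{k}$ |
| Mean | $\frac{1}{p}$ |
| Median | $\left\lceil \frac{-1}{\log_2(1-p)} \right\rceil$ (not unique if $-1/\log_2(1-p)$ is an integer) |
| Mode | $1$ |
| Variance | $\frac{1-p}{p^2}$ |
| Skewness | $\frac{2-p}{\sqrt{1-p}}$ |
| Ex. kurtosis | $6+\frac{p^2}{1-p}$ |
| Entropy | $\frac{-(1-p)\log_2(1-p)-p\log_2 p}{p}$ |
| MGF | $\frac{pe^{t}}{1-(1-p)e^{t}}$, for $t < -\ln(1-p)$ |
| CF | $\frac{pe^{it}}{1-(1-p)e^{it}}$ |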

There are two similar distributions with the name "Geometric Distribution".

- The probability distribution of the number X of Bernoulli trials needed to get one success, supported on the set { 1, 2, 3, ...}

- The probability distribution of the number Y = X − 1 of failures before the first success, supported on the set { 0, 1, 2, 3, ... }

These two different geometric distributions should not be confused with each other. Often, the name shifted geometric distribution is adopted for the former one. We will use X and Y to distinguish the two.
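
The relationship between the two definitions can be illustrated with a small simulation. This is only a sketch: the helper name `trials_until_success`, the value `p = 0.25`, and the fixed seed are illustrative assumptions, not part of the definitions above.

```python
import random


def trials_until_success(p, rng):
    """Return X: the number of Bernoulli(p) trials up to and including the first success."""
    x = 1
    while rng.random() >= p:  # a failure happens with probability 1 - p
        x += 1
    return x


rng = random.Random(0)  # fixed seed so the run is repeatable
p = 0.25                # assumed success probability, for illustration only

xs = [trials_until_success(p, rng) for _ in range(10_000)]  # X takes values 1, 2, 3, ...
ys = [x - 1 for x in xs]                                    # Y = X - 1 counts only the failures

print("smallest X:", min(xs), " smallest Y:", min(ys))
print("mean of X:", sum(xs) / len(xs), " mean of Y:", sum(ys) / len(ys))
```

Because Y is obtained from X by subtracting 1, the two sample means always differ by exactly 1, while the spread of the two samples is identical.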

Shifted

The shifted geometric distribution describes the number of attempts needed to obtain a desired result for the first time, when every attempt is independent and has the same chance of success. For example:

- How many times will I throw a coin until it lands on heads?

- How many children will I have until I get a girl?

- How many cards will I draw from a pack until I get a Joker, if each card is returned and the pack reshuffled before the next draw?

Just like the Bernoulli distribution, the geometric distribution has one controlling parameter: the probability of success p in each independent trial.

If a random variable X follows a geometric distribution with parameter p, we write its probability mass function as:

$$P(X = k) = (1-p)^{k-1}\,p, \qquad k = 1, 2, 3, \ldots$$

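With a geometric distribution it is also easy to calculate the probability of a "more than n times" case. Needing more than n attempts means that the first n attempts all failed, so the probability of failing to achieve the wanted result in n attempts is $P(X > n) = (1-p)^{n}$.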
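
As a rough check of the "more than n times" case, the sketch below assumes the standard closed forms $P(X = k) = (1-p)^{k-1}p$ and $P(X > n) = (1-p)^{n}$ and compares the tail probability with one minus the sum of the first n probabilities. The function names `shifted_pmf` and `more_than` and the values of `p` and `n` are illustrative choices.

```python
def shifted_pmf(k, p):
    """P(X = k): the first success occurs on trial k (k = 1, 2, 3, ...)."""
    if k < 1:
        return 0.0
    return (1 - p) ** (k - 1) * p


def more_than(n, p):
    """P(X > n): the first n trials are all failures."""
    return (1 - p) ** n


p = 0.5  # assumed success probability (a fair coin)
n = 3    # "more than 3 throws"

# Complement of "success within the first n trials".
via_sum = 1 - sum(shifted_pmf(k, p) for k in range(1, n + 1))

print(more_than(n, p), via_sum)
```

Both routes give the same number, which is why the closed form is usually preferred over summing the individual probabilities.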

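Example: A student comes home from a party in the forest, at which interesting substances were consumed. The student is trying to find the key to his front door on a keychain with 10 different keys. What is the probability that the student finds the right key on the 4th attempt?

Assuming that each attempt picks one of the 10 keys at random, independently of the earlier attempts, we have $p = 1/10$ and

$$P(X = 4) = \left(\frac{9}{10}\right)^{3} \cdot \frac{1}{10} = 0.0729$$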
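
The key example can be worked through with exact fractions. This sketch assumes that every attempt picks one of the 10 keys uniformly at random and independently of earlier attempts, so that $p = 1/10$; the text does not say whether keys that were already tried are set aside, so this is an assumption. The variable names are illustrative.

```python
from fractions import Fraction

p = Fraction(1, 10)  # assumed chance of picking the right key on any single attempt
k = 4                # the attempt on which the door is finally opened

# Three failures followed by one success.
on_fourth = (1 - p) ** (k - 1) * p

# Success on some attempt from 1 to 4: the complement of four failures in a row.
within_four = 1 - (1 - p) ** k

print(on_fourth, float(on_fourth))  # 729/10000 0.0729
print(within_four)                  # 3439/10000
```

Using `Fraction` avoids floating-point rounding, so the result can be compared directly with a calculation done by hand.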

Unshifted

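Writing X for the unshifted variable (called Y in the introduction), the probability mass function is defined as:

- $f(x) = p(1-p)^{x}$ for $x \in \{0, 1, 2, \ldots\}$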

Mean

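The mean is the probability-weighted sum of all possible values:

$$E[X] = \sum_{x=0}^{\infty} x\,p(1-p)^{x}$$

Let $q = 1-p$, so that $p = 1-q$:

$$E[X] = \sum_{x=0}^{\infty} x\,(1-q)\,q^{x} = (1-q)\,q \sum_{x=0}^{\infty} x\,q^{x-1} = (1-q)\,q \sum_{x=0}^{\infty} \frac{d}{dq}\,q^{x}$$

We can now interchange the derivative and the sum. This is valid for $|q| < 1$, because a power series can be differentiated term by term inside its radius of convergence.

$$E[X] = (1-q)\,q\,\frac{d}{dq} \sum_{x=0}^{\infty} q^{x} = (1-q)\,q\,\frac{d}{dq}\,\frac{1}{1-q} = (1-q)\,q\,\frac{1}{(1-q)^{2}} = \frac{q}{1-q} = \frac{1-p}{p}$$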
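
A quick numerical sanity check of the mean is sketched below. It assumes the unshifted probability mass function $f(x) = p(1-p)^{x}$ on $x = 0, 1, 2, \ldots$ and the closed form $(1-p)/p$ for the mean, and it truncates the infinite sum at an arbitrary 2000 terms. The function names and the tested values of `p` are illustrative.

```python
def unshifted_pmf(x, p):
    """f(x) = p (1 - p)^x: probability of exactly x failures before the first success."""
    return p * (1 - p) ** x


def truncated_mean(p, terms=2000):
    """Approximate E[X] by summing x * f(x) over the first `terms` values of x."""
    return sum(x * unshifted_pmf(x, p) for x in range(terms))


for p in (0.1, 0.5, 0.9):
    closed_form = (1 - p) / p
    print(p, truncated_mean(p), closed_form)
```

The truncation is harmless here because the terms decay geometrically; for values of p much closer to 0 more terms would be needed.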

Variance

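We derive the variance using the following formula:

$$\operatorname{Var}[X] = E[X^{2}] - (E[X])^{2}$$

We have already calculated $E[X]$ above, so now we will calculate $E[X^{2}]$ and then return to this variance formula:

$$E[X^{2}] = \sum_{x=0}^{\infty} x^{2}\,p(1-p)^{x}$$

Let $q = 1-p$:

$$E[X^{2}] = \sum_{x=0}^{\infty} x^{2}\,(1-q)\,q^{x}$$

We now manipulate $x^{2}$ so that we get forms that are easy to handle by the technique used when deriving the mean. Writing $x^{2} = x(x-1) + x$:

$$E[X^{2}] = (1-q)\left[\sum_{x=0}^{\infty} x(x-1)\,q^{x} + \sum_{x=0}^{\infty} x\,q^{x}\right] = (1-q)\left[q^{2}\sum_{x=0}^{\infty} x(x-1)\,q^{x-2} + q\sum_{x=0}^{\infty} x\,q^{x-1}\right]$$

$$= (1-q)\,q\left[q\,\frac{d^{2}}{dq^{2}}\sum_{x=0}^{\infty} q^{x} + \frac{d}{dq}\sum_{x=0}^{\infty} q^{x}\right] = (1-q)\,q\left[\frac{2q}{(1-q)^{3}} + \frac{1}{(1-q)^{2}}\right]$$

$$= \frac{2q^{2}}{(1-q)^{2}} + \frac{q}{1-q} = \frac{q(1+q)}{(1-q)^{2}} = \frac{(1-p)(2-p)}{p^{2}}$$

We then return to the variance formula:

$$\operatorname{Var}[X] = \frac{(1-p)(2-p)}{p^{2}} - \left(\frac{1-p}{p}\right)^{2} = \frac{(1-p)\left[(2-p)-(1-p)\right]}{p^{2}} = \frac{1-p}{p^{2}}$$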
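
The variance can be checked numerically in the same spirit. The sketch below assumes the unshifted probability mass function $f(x) = p(1-p)^{x}$ and the closed forms $E[X^{2}] = (1-p)(2-p)/p^{2}$ and $\operatorname{Var}[X] = (1-p)/p^{2}$; the helper name `truncated_moments`, the cut-off of 2000 terms, and the value `p = 0.3` are illustrative assumptions.

```python
def truncated_moments(p, terms=2000):
    """Approximate E[X] and E[X^2] for f(x) = p (1 - p)^x by truncated sums."""
    q = 1 - p
    first = sum(x * p * q ** x for x in range(terms))
    second = sum(x * x * p * q ** x for x in range(terms))
    return first, second


p = 0.3  # assumed success probability, for illustration only
mean, second_moment = truncated_moments(p)

# Var[X] = E[X^2] - (E[X])^2
variance = second_moment - mean ** 2

print("E[X^2]:", second_moment, "closed form:", (1 - p) * (2 - p) / p ** 2)
print("Var[X]:", variance, "closed form:", (1 - p) / p ** 2)
```

The same variance applies to the shifted distribution, since adding the constant 1 to a random variable does not change its spread.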